One of the main challenges in frame interpolation is handling complex motion and occlusions in the scene. Traditional methods often produce artifacts such as blurring, ghosting, or flickering. To overcome these limitations, Google Research has developed an approach based on deep learning and optical flow.

The approach consists of two steps: first, an optical flow network estimates the motion vectors between two input frames; second, a pixel synthesis network generates the intermediate frame by warping and blending the input frames according to the motion vectors. The optical flow network is trained with a self-supervised loss that does not require ground-truth motion labels. The pixel synthesis network is trained with a perceptual loss that encourages realistic and sharp results.
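To make the warp-and-blend step concrete, here is a minimal NumPy sketch. It is not Google Research's implementation: the function names `backward_warp` and `interpolate_midframe`, the flow convention (each output pixel stores the offset to read from in the source frame), and the plain linear blend are all assumptions made for illustration. In the approach described above, the motion vectors would come from the optical flow network and the blending would be learned by the pixel synthesis network rather than fixed.

```python
import numpy as np


def backward_warp(frame, flow):
    """Warp `frame` by sampling it at flow-displaced positions (bilinear).

    frame: (H, W, C) float array.
    flow:  (H, W, 2) float array; flow[y, x] = (dx, dy) is the offset from
           output pixel (x, y) to the location to read in `frame`.
           (Assumed convention for this sketch.)
    """
    h, w = frame.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)

    # Source coordinates, clamped so samples stay inside the image.
    src_x = np.clip(xs + flow[..., 0], 0, w - 1)
    src_y = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer corners surrounding each source coordinate.
    x0 = np.floor(src_x).astype(int)
    y0 = np.floor(src_y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)

    # Fractional weights, broadcast over the channel axis.
    wx = (src_x - x0)[..., None]
    wy = (src_y - y0)[..., None]

    top = (1 - wx) * frame[y0, x0] + wx * frame[y0, x1]
    bottom = (1 - wx) * frame[y1, x0] + wx * frame[y1, x1]
    return (1 - wy) * top + wy * bottom


def interpolate_midframe(frame0, frame1, flow_t0, flow_t1, t=0.5):
    """Blend two warped frames into an intermediate frame at time t in [0, 1].

    flow_t0 / flow_t1 map intermediate-frame pixels to frame0 / frame1.
    A fixed linear blend stands in for a learned synthesis network.
    """
    warped0 = backward_warp(frame0, flow_t0)
    warped1 = backward_warp(frame1, flow_t1)
    return (1 - t) * warped0 + t * warped1
```

For example, if a bright pixel sits at column 2 in the first frame and column 4 in the second, a uniform flow of -1 pixel toward the first frame and +1 pixel toward the second places it at column 3 in the intermediate frame. A fixed blend like this ignores occlusions, which is one reason a learned synthesis step is used in practice.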

The approach can handle large motions and complex occlusions better than previous methods. It can also produce high-quality results for challenging scenarios such as water splashes, fire, smoke, and hair. The approach is fast and efficient, running at 30 frames per second on a single GPU.

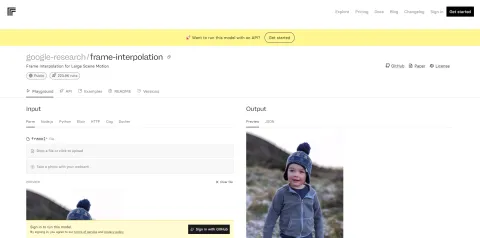

Google Research has made the code and models available on Replicate.com, a platform that allows anyone to run and reproduce machine learning experiments. You can find the project page here: https://replicate.com/google-research/frame-interpolation. You can also watch a video demonstration here: https://www.youtube.com/watch?v=7oQn9yXxOaU.

If you are interested in learning more about frame interpolation and how Google Research is advancing the state of the art in video processing, you can read their paper here: https://arxiv.org/abs/2009.05113. You can also follow their blog and Twitter account for updates and news.