Sentiment Analysis using BERT

What is Sentiment Analysis?

Sentiment Analysis is the process of identifying and understanding the feelings and opinions behind a text. This process utilizes artificial intelligence and natural language processing (NLP) to determine if the text expresses positive, negative, or neutral emotions. Businesses use this process to analyze public feedback on their products.

What is BERT?

BERT stands for Bidirectional Encoder Representations from Transformers. It is a machine learning model specifically designed to handle natural language. BERT excels at understanding the context of words within a sentence. It is based on the transformer architecture, a specific type of neural network architecture.

In this article, we will show how to train BERT, We will use restaurant reviews stored in a CSV file and develop a function to predict whether a review is positive or negative.

Dataset

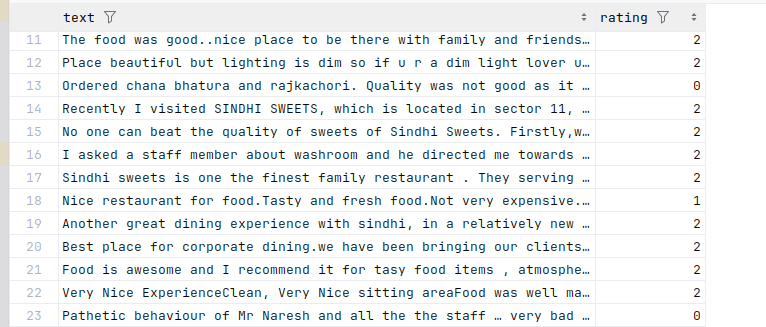

We will use a CSV file containing 2,000 restaurant reviews. Our dataset is already cleaned and preprocessed, as shown in the image below. It has two columns: "text" and "rating." The rating ranges from 0 to 2, where 0 is negative, 1 is neutral, and 2 is positive.

Model Training

We will start by importing the libraries required for our Python code.

# PyTorch library, used for tensor operations and building deep learning models.

import torch

# Imports classes for handling datasets and creating data loaders for batching and shuffling.

from torch.utils.data import Dataset, DataLoader

# Imports the BERT tokenizer, BERT model for sequence classification, and the AdamW optimizer from the Hugging Face transformers library.

from transformers import BertTokenizer, BertForSequenceClassification, AdamW

# Imports a function to split the dataset into training and testing sets.

from sklearn.model_selection import train_test_split

# Imports a function to calculate the accuracy of predictions.

from sklearn.metrics import accuracy_score

# Imports the pandas library for data manipulation and analysis.

import pandas as pdLoading dataset

# Loads a CSV file containing the dataset into a pandas DataFrame.

df = pd.read_csv('reviews.csv')

# Displays the first few rows of the DataFrame to inspect the data.

df.head()Split the dataset into training and testing sets

# Splits the dataset into training (80%) and testing (20%) sets.

train_df, test_df = train_test_split(df, test_size=0.2, random_state=42)

# Selects the 'rating' column from the training DataFrame.

train_df['rating']Define a custom dataset class for loading data

class CustomDataset(Dataset):

def __init__(self, texts, labels, tokenizer, max_len):

self.texts = texts # Stores the texts.

self.labels = labels # Stores the labels.

self.tokenizer = tokenizer # Stores the tokenizer.

self.max_len = max_len # Stores the maximum sequence length.

def __len__(self):

return len(self.texts)

def __getitem__(self, idx):

text = str(self.texts[idx])

label = int(self.labels[idx])

encoding = self.tokenizer.encode_plus(

text,

add_special_tokens=True,

max_length=self.max_len,

return_token_type_ids=False,

padding='max_length',

return_attention_mask=True,

return_tensors='pt',

truncation=True

)

return {

'text': text,

'input_ids': encoding['input_ids'].flatten(),

'attention_mask': encoding['attention_mask'].flatten(),

'label': torch.tensor(label, dtype=torch.long)

}Set up BERT Model and Tokenizer

# Loads a pre-trained BERT Model for sequence classification with 3 labels.

model = BertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=3)

# Loads the BERT tokenizer.

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

# Set device

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

model.to(device)Create DataLoader for training and testing sets

max_len = 128

batch_size = 32

train_dataset = CustomDataset(train_df['text'].values, train_df['rating'].values, tokenizer, max_len)

train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

test_dataset = CustomDataset(test_df['text'].values, test_df['rating'].values, tokenizer, max_len)

test_loader = DataLoader(test_dataset, batch_size=batch_size, shuffle=False)Set up optimizer and loss function

optimizer = AdamW(model.parameters(), lr=2e-5)

loss_fn = torch.nn.CrossEntropyLoss()

# Training loop

epochs = 3

for epoch in range(epochs):

model.train()

for batch in train_loader:

input_ids = batch['input_ids'].to(device)

attention_mask = batch['attention_mask'].to(device)

labels = batch['label'].to(device)

optimizer.zero_grad()

outputs = model(input_ids, attention_mask=attention_mask, labels=labels)

loss = outputs.loss

loss.backward()

optimizer.step()Evaluation

model.eval()

predictions = []

true_labels = []

with torch.no_grad():

for batch in test_loader:

input_ids = batch['input_ids'].to(device)

attention_mask = batch['attention_mask'].to(device)

labels = batch['label'].to(device)

outputs = model(input_ids, attention_mask=attention_mask)

logits = outputs.logits

predictions.extend(torch.argmax(logits, dim=1).cpu().numpy())

true_labels.extend(labels.cpu().numpy())Calculate Accuracy

accuracy = accuracy_score(true_labels, predictions)

print(f'Accuracy: {accuracy}')Save model and tokenizer

model.save_pretrained('/home/ailistmaster/SentimentModel')

tokenizer.save_pretrained('/home/ailistmaster/SentimentModel')Project GitHub Repository